eric.cytu@gmail.com

Chun-Yi Tu

I'm an M.S. student at UC San Diego majoring in Computer Science. With hands-on skills in web development and 3 years of experience in Machine Learning, I am passionate about making an impact by creating useful applications. Here's my resume.

Experience

Software Engineering Intern

Verizon Media - Sunnyvale, CA

- Automated model publishing for TensorFlow Serving using a CI/CD pipeline with Chef

- Reduced the model deployment time for Yahoo news feed by over 90% using dynamic model loading

- Designed a RESTful web service with Java and Jetty to validate models and monitor server status

Software Engineering Intern

Dragon Cloud Artificial Intelligence - Taipei, Taiwan

- Developed a speaker recognition pipeline and API to authenticate users on mobile and IoT devices

- Created an interactive dashboard to visualize model performance and monitor usage for troubleshooting and improvement

- Integrated traditional speech processing methods with deep learning to increase verification rate by 15%

Research and Teaching Assistant

Computational Learning Lab, National Taiwan University - Taipei, Taiwan

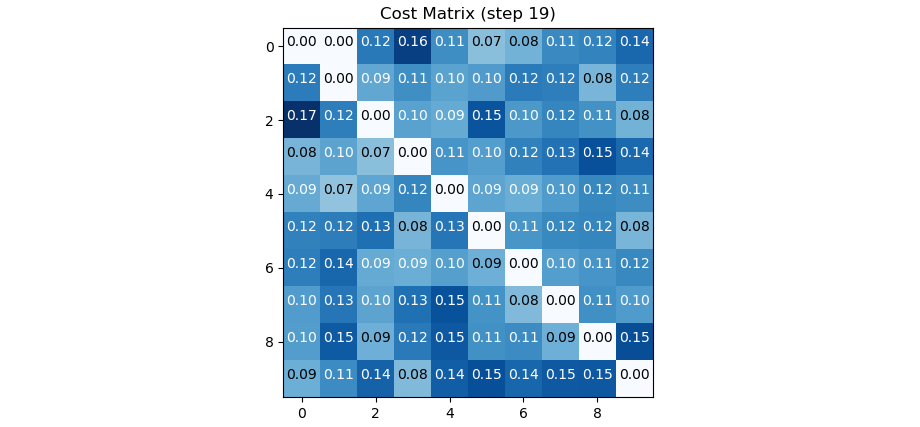

- Designed an optimization technique for Cost-Sensitive Learning in the Multiclass Imbalance Problem

- Increased average multi-class recall by 20% using Reinforcement Learning (PPO) in TensorFlow

- Received College Student Research Award, given to only one project in the Department of Computer Science

- Held office hours to provide individualized help for more than 200 students in the Machine Learning course

Education

University of California, San Diego

M.S. in Computer Science and Engineering

GPA: 4.0/4.0

Courses: Natural Language Processing, Recommender Systems, Virtualization, Compiler Design, Computer Vision, Convex Optimization

National Taiwan University

B.S. in Computer Science and Information Engineering

GPA: 4.14/4.30; Rank: 10/123

Courses: Algorithm Design and Analysis, Digital Image Processing, Video Communication, Machine Learning, Computer Architecture, Computer Network, Operating System

Skills

Programming: Python, Java, C/C++, JavaScript, Haskell, SQL, MATLAB, HTML/CSS

Technologies/Frameworks: Docker, Git, AWS, TensorFlow, React, Node.js, Express, Django, REST, MVC, Ajax, Linux Kernel

Database: MySQL, PostgreSQL, MongoDB

Projects

Neural Artist [website]

Designed a web application for image stylization, along with a platform for users to share images and write posts, using Node.js, Express, and MongoDB. Used fast style transfer in Python and TensorFlow to transform uploaded user pictures into artistic images.

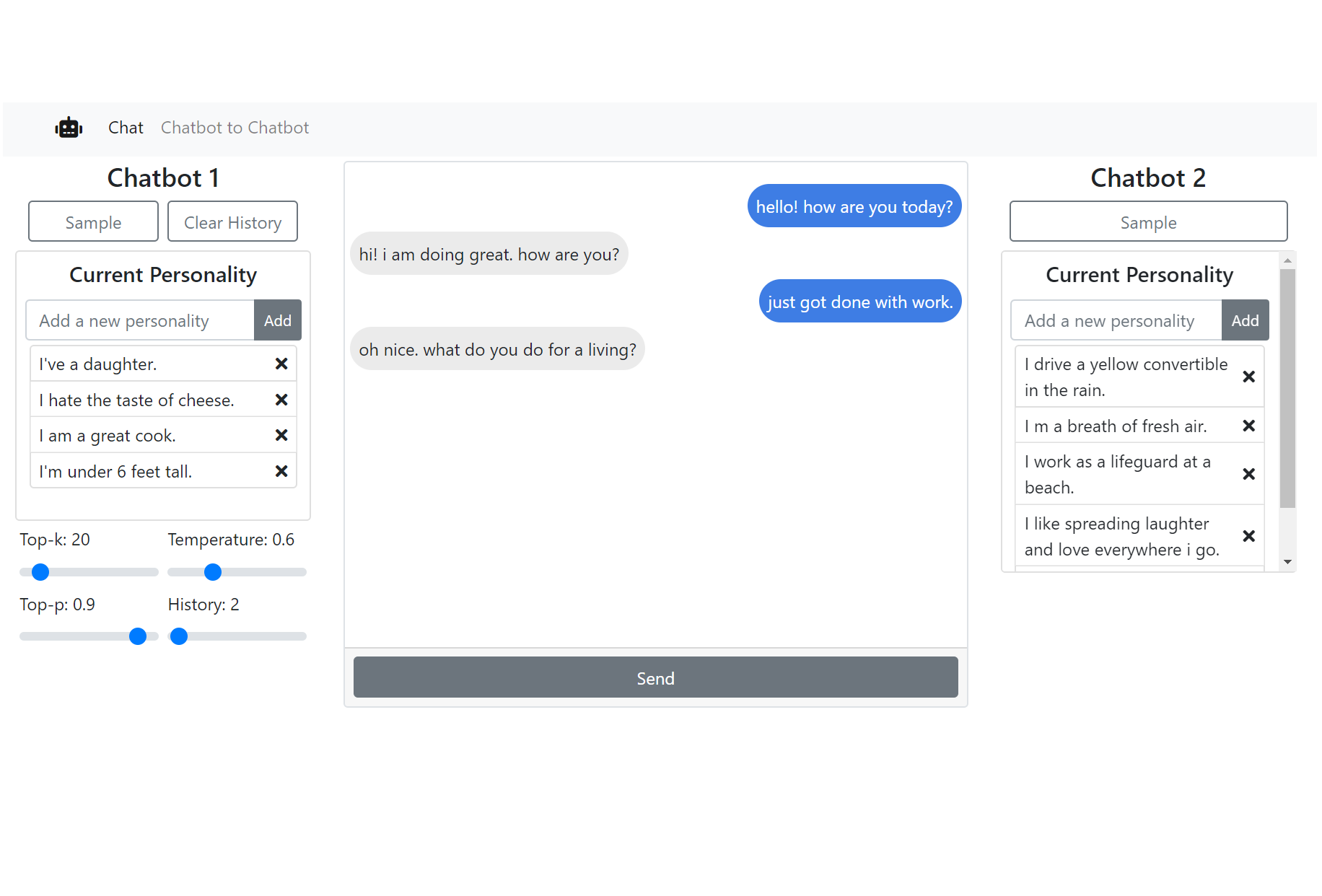

Persona Chatbot [website]

Developed a web application using Django for users to interact with the chatbot and define its personality. Used Transfer Learning and a Transformer model to build a chit-chat chatbot that maintains a consistent personality throughout the conversation.

Cost Learning Network [paper]

Used Reinforcement Learning (PPO) in TensorFlow to learn an optimal cost matrix that improves Cost-Sensitive Learning on the Class Imbalance Problem. Increased average multi-class recall by 20% compared with the state-of-the-art cost-sensitive algorithm.

Goodreads Rating and Read Prediction on Kaggle

Ranked 8th out of 423 participants with an MSE of 1.099 on rating prediction and 73.32% accuracy on read prediction. Exploited implicit feedback by maximizing the probability of relative preference predictions.

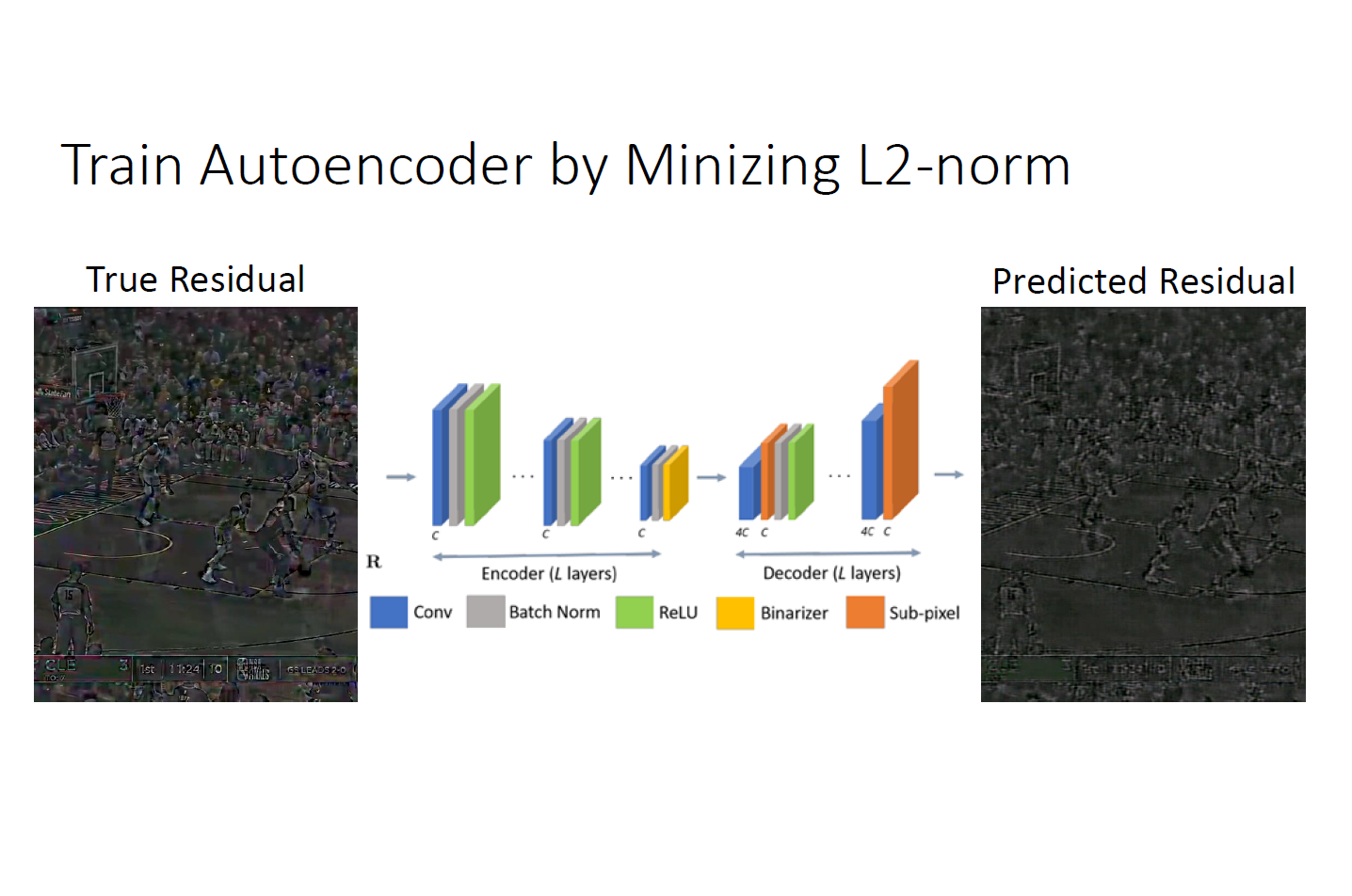

Video Compression via Convolutional Neural Network [slide]

Built a streaming video codec that integrates a Convolutional Neural Network Autoencoder and Huffman Coding with H.264 to achieve a higher compression ratio than the conventional H.264 codec.